-

How to Install Postgres on Ubuntu 20.04

This post is a short note on how to install Postgres on Ubuntu 20.04.

I’m doing this in WSL, but that shouldn’t make any difference if you’re on the same Ubuntu version.

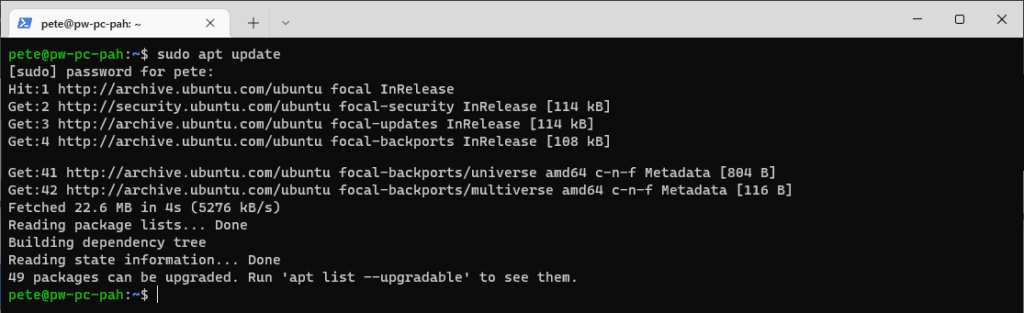

First, let’s update our local package index by running the following apt command:

    # update the local package index
    sudo apt update

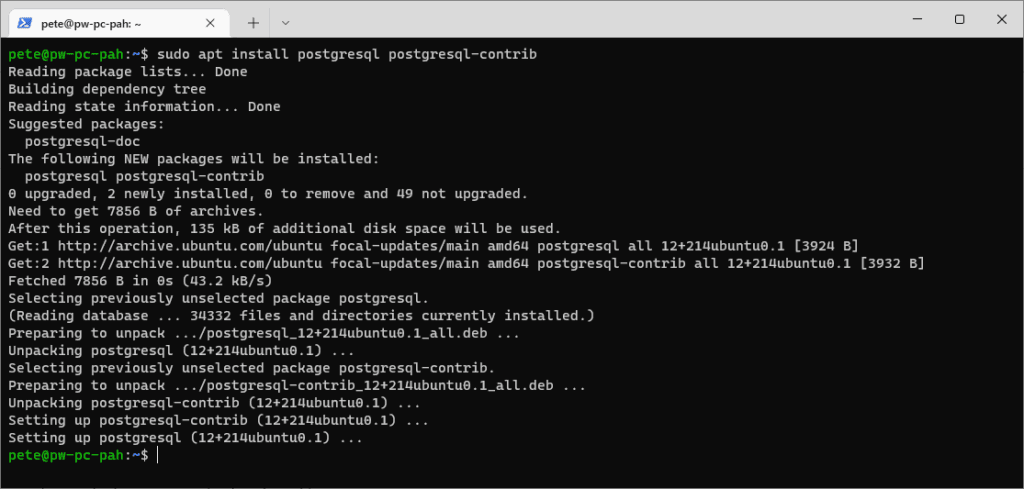

This Postgres installation is very quick and simple.

The following command also installs the postgresql-contrib package, which provides additional extensions and utilities.

    # install postgres on ubuntu
    sudo apt install postgresql postgresql-contrib

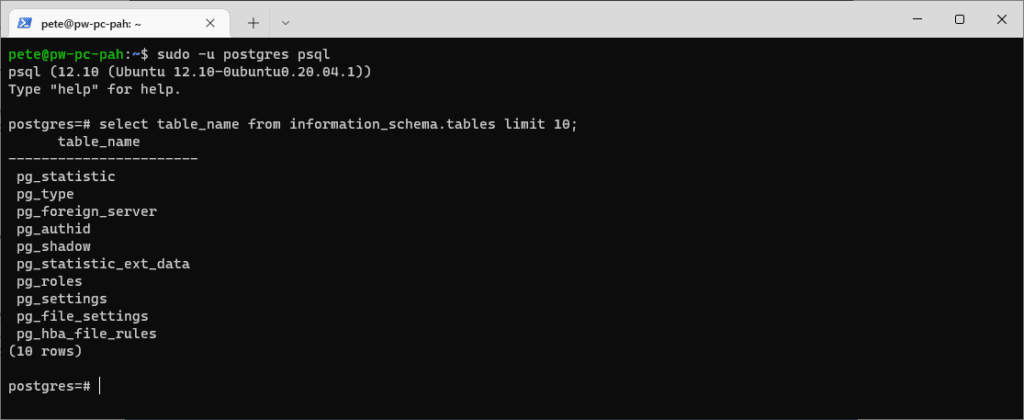

Once the installation completes, we can enter psql using the postgres user created automatically during the install.

    # log in to psql with the out-of-the-box postgres user
    sudo -u postgres psql

    # inside psql, type \q to quit back to the terminal

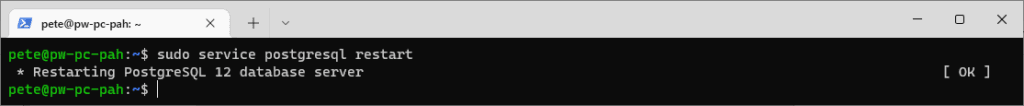
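For a quick check without opening an interactive session, psql can also run a single statement with its -c option. The sketch below wraps that in a small helper. The function name pg_server_version is made up for this example, and it assumes the default postgres user created by the package install.

```shell
# Hypothetical helper (the name is illustrative): print the server version
# by running one statement as the postgres OS user, then exit.
pg_server_version() {
    # -t: rows only, -A: unaligned output, -c: run a single command
    sudo -u postgres psql -tAc 'SELECT version();'
}

# Usage:
#   pg_server_version
```

If this prints a version string, the server is up and the postgres user can connect.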

You may need to restart the service after the install, which can be done by running the following.

    # restart postgres service ubuntu
    sudo service postgresql restart
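To confirm the restart worked, one option is pg_isready, which ships with the PostgreSQL client tools and returns exit code 0 when the server is accepting connections. This is only a sketch: the helper name pg_restart_and_check is an assumption for illustration, and it presumes a default local install.

```shell
# Hypothetical helper: restart PostgreSQL, then confirm it accepts connections.
pg_restart_and_check() {
    sudo service postgresql restart || return 1
    # -q suppresses output; only the exit code is used here.
    if pg_isready -q; then
        echo "PostgreSQL is accepting connections"
    else
        echo "PostgreSQL is not ready yet" >&2
        return 1
    fi
}

# Usage:
#   pg_restart_and_check
```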

-

How to Manage S3 Buckets with AWS CLI

This post shows how to manage S3 Buckets with AWS CLI, and explains some local Operating System (OS) considerations when running such commands.

First of all, you will need to be authenticated to your AWS Account and have AWS CLI installed. I cover this in previous blog posts:

> How to Install and Configure AWS CLI on Windows

> How to Install and Configure AWS CLI on Ubuntu

I’m more often involved in the PowerShell side rather than Linux. AWS CLI commands do the same thing in both environments, but the native (OS) language is used around them for manipulating data for output and for other things like wrapping commands in a For Each loop. All commands in this post can run on either OS.

PowerShell is cross-platform and has supported various Linux & DOS commands since its release. Some are essential for everyday use, for example,

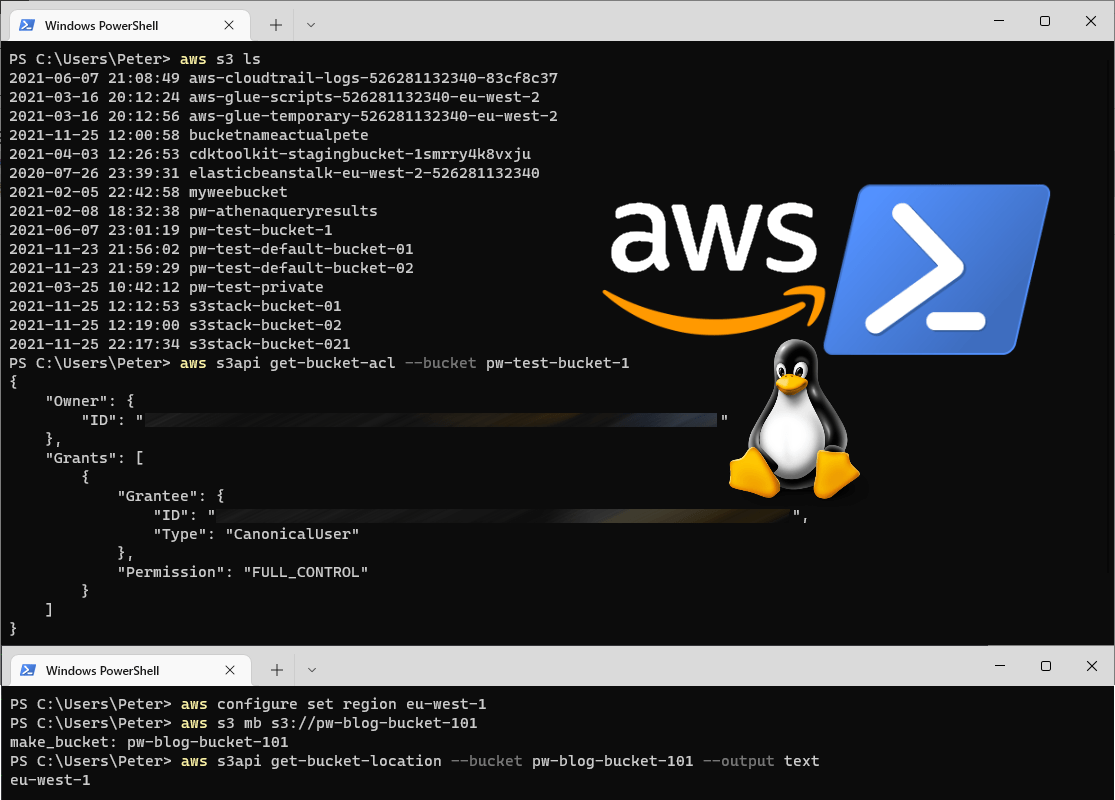

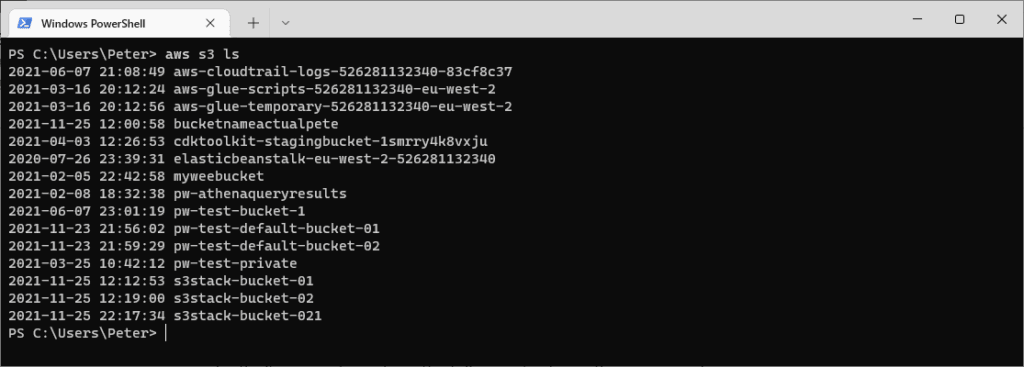

ping, cd, ls, mkdir, rm, cat, pwdand more. There are more commands being added over time like tar and curl which is good to see. Plus, we have WSL to help integrate non-supported Linux commands.Here’s one of the simplest examples which list all S3 buckets the executing IAM User owns within your AWS Account.

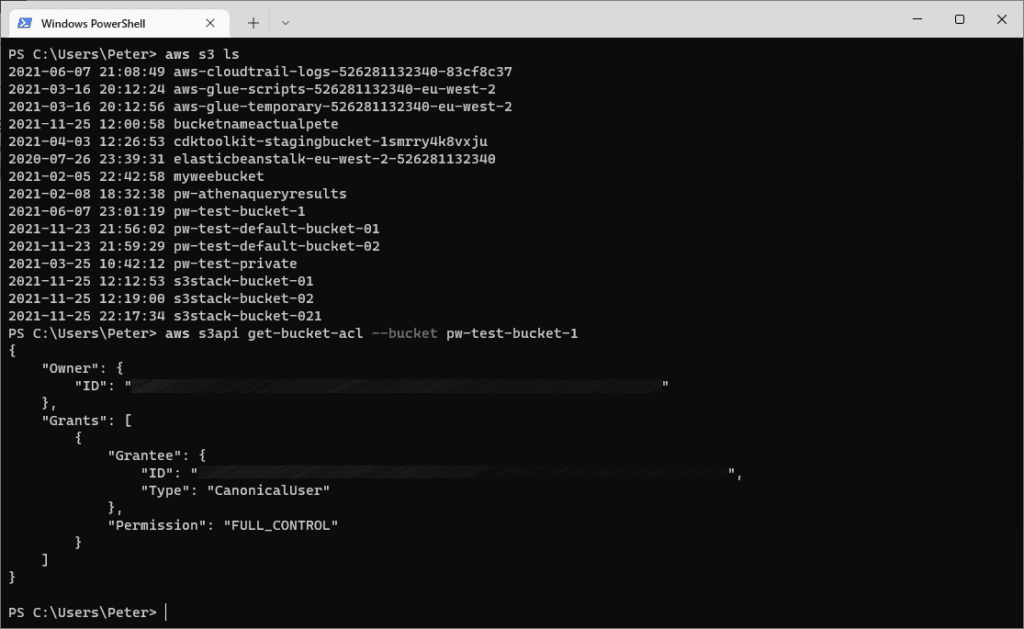

    # List all buckets in an AWS Account
    aws s3 ls

The Default Region is configured during the AWS CLI Configuration as linked above. We can change this by running aws configure set region or by setting the AWS_DEFAULT_REGION environment variable. Alternatively, we can pass the --region option after ‘ls’ to override the Default Region for a single command. There are more ways for us to run commands across multiple Regions, which I hope to cover another day.
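To make the Region options a little more concrete, here is a minimal sketch of a helper that reports which Region a command would pick up. It is a simplification: it only looks at the AWS_DEFAULT_REGION environment variable and then the configured default, and the function name effective_region is made up for this example.

```shell
# Hypothetical helper: show which Region would be used, checking only the
# AWS_DEFAULT_REGION environment variable and then the configured default.
effective_region() {
    if [ -n "${AWS_DEFAULT_REGION:-}" ]; then
        echo "$AWS_DEFAULT_REGION"
    else
        # Reads the value stored by "aws configure set region ..."
        aws configure get region
    fi
}

# Usage:
#   effective_region                  # prints the configured default
#   aws s3 ls --region eu-west-2      # --region overrides both for one command
```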

Now I’m going to run a command to show the Access Control List (ACL) of a bucket, using one of the Bucket Names returned by the previous command. This time, I’m using the s3api command rather than s3; look here for more information on the differences between them. When running AWS CLI commands, these API docs will always help you out.

    # Show S3 Bucket ACL
    aws s3api get-bucket-acl --bucket my-bucket
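The same command can be wrapped in a loop to cover every bucket in the account, which is the kind of For Each wrapping mentioned earlier. The sketch below is one way to do that in a Linux shell. The function name show_all_bucket_acls is an assumption for illustration, and it relies on the --query and --output text options to get plain bucket names.

```shell
# Hypothetical helper: print the ACL of every bucket the caller can list.
show_all_bucket_acls() {
    # With --output text the bucket names come back tab-separated on one line,
    # so turn the tabs into newlines before looping.
    aws s3api list-buckets --query 'Buckets[].Name' --output text |
        tr '\t' '\n' |
        while read -r bucket; do
            [ -n "$bucket" ] || continue
            echo "== $bucket"
            aws s3api get-bucket-acl --bucket "$bucket"
        done
}

# Usage:
#   show_all_bucket_acls
```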

Next up, I’m going to create a bucket using the s3 command rather than s3api. I’m doing this because I want to rely on my Default Region for the new S3 Bucket, rather than specifying it within the command. Here’s AWS’s explanation of this:

> “Regions outside of us-east-1 require the appropriate LocationConstraint to be specified in order to create the bucket in the desired region:
>
> --create-bucket-configuration LocationConstraint=eu-west-1”
>
> AWS API Docs

The following command creates a new S3 Bucket in my Default Region, and I verify the location with get-bucket-location afterwards.

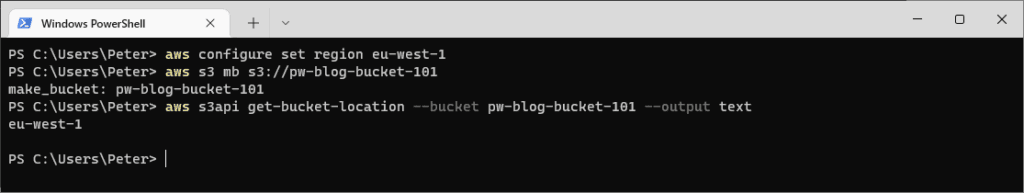

    # Change AWS CLI Default Region
    aws configure set region eu-west-1

    # Create a new S3 Bucket in your Default Region
    aws s3 mb s3://pw-blog-bucket-101

    # Check the Region of a S3 Bucket
    aws s3api get-bucket-location --bucket pw-blog-bucket-101 --output text

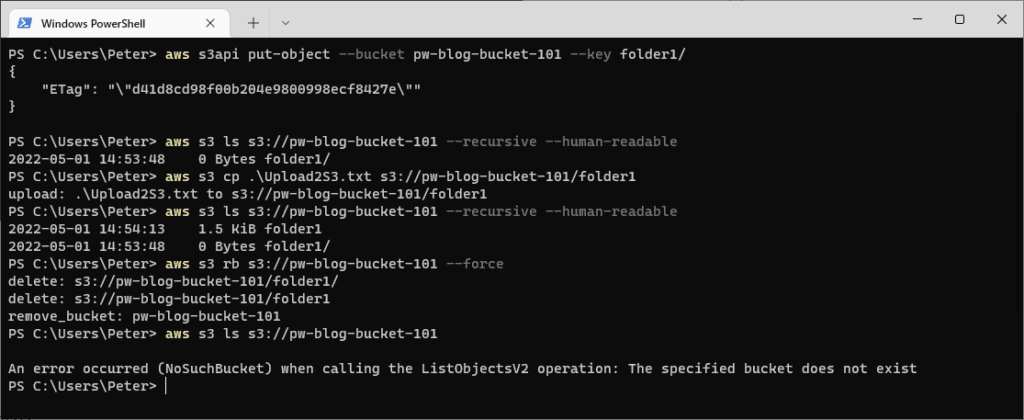
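For comparison, this is roughly what the s3api route looks like when the Region is given explicitly, following the LocationConstraint rule quoted above. Treat it as a sketch: the helper name create_bucket_in_region and the bucket name in the usage line are placeholders, not something from AWS.

```shell
# Hypothetical helper: create a bucket with s3api in an explicit Region.
# us-east-1 is the one Region where LocationConstraint is left out.
create_bucket_in_region() {
    bucket="$1"
    region="$2"
    if [ "$region" = "us-east-1" ]; then
        aws s3api create-bucket --bucket "$bucket" --region "$region"
    else
        aws s3api create-bucket --bucket "$bucket" --region "$region" \
            --create-bucket-configuration "LocationConstraint=$region"
    fi
}

# Usage (placeholder bucket name):
#   create_bucket_in_region my-example-bucket eu-west-1
```

The s3 mb command used above avoids this branching, which is why it is the shorter option when the Default Region is already the one you want.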

And finally, to finish this off I’m going to:

– Create a folder (known as an Object) within the new Bucket.

– List items in the S3 Bucket.

– Copy a file from my desktop into the folder.

– List items in the S3 Bucket.

– Delete the Bucket.

    # Create folder/object within a S3 Bucket
    aws s3api put-object --bucket pw-blog-bucket-101 --key folder1/

    # Show objects within S3 Bucket
    aws s3 ls s3://pw-blog-bucket-101 --recursive --human-readable

    # Copy a local file into the folder above (the trailing slash makes folder1/ the key prefix)
    aws s3 cp .\Upload2S3.txt s3://pw-blog-bucket-101/folder1/

    # Show objects within S3 Bucket
    aws s3 ls s3://pw-blog-bucket-101 --recursive --human-readable

    # Delete the S3 Bucket (--force empties a non-empty bucket first)
    aws s3 rb s3://pw-blog-bucket-101 --force

    # List the S3 Bucket above (expect error)
    aws s3 ls s3://pw-blog-bucket-101

-

Managing Old Files with PowerShell

Managing disk space is a common task for IT professionals, especially when dealing with folders that accumulate temporary or outdated files. Using a simple PowerShell script, we can quickly check and delete files older than a given date.

In this post, I’ll cover:

Reviewing Files for Cleanup: Generate a count of files older than 30 days, grouped by folder.

Deleting Old Files: Automatically remove files older than a specified number of days, with an output showing the count of deleted files per folder.

1. Reviewing Old Files for Cleanup

This script will help you identify and review old files by counting how many files are older than 30 days in each folder within a specified directory.

PowerShell Script: Count Old Files by Folder

    # Script: Count Old Files by Folder
    # Set path to the root folder, and max age params
    $rootFolder = "C:\myfolder"
    $maxAge = 30

    # get old file info
    $cutoffDate = (Get-Date).AddDays(-$maxAge)
    $report = Get-ChildItem -Path $rootFolder -Directory | ForEach-Object {
        $folder = $_.FullName
        $oldFiles = Get-ChildItem -Path $folder -File | Where-Object { $_.LastWriteTime -lt $cutoffDate }
        [PSCustomObject]@{
            FolderPath   = $folder
            OldFileCount = $oldFiles.Count
        }
    }

    # Output the report
    $report | Format-Table -AutoSize

Output Example

| FolderPath | OldFileCount |
| --- | --- |
| C:\myfolder\logs | 25 |
| C:\myfolder\temp | 10 |

We can then use this information to verify what’s old within these directories.

2. Deleting Old Files with a PowerShell Script

Once you’ve reviewed the old files, this script can delete files older than a specified number of days. This script also outputs a count of deleted files per folder, helping you track cleanup progress.

PowerShell Script: Delete Old Files with Count

    # Script: Delete Old Files with Count
    # Set the path to the root folder you want to clean up
    $rootFolder = "C:\myfolder"

    # Set the maximum age of the files in days
    $maxAge = 30

    # Calculate the cutoff date
    $cutoffDate = (Get-Date).AddDays(-$maxAge)

    # Loop through each subfolder and delete old files
    Get-ChildItem -Path $rootFolder -Directory | ForEach-Object {
        $folder = $_.FullName
        $oldFiles = Get-ChildItem -Path $folder -File | Where-Object { $_.LastWriteTime -lt $cutoffDate }
        $fileCount = $oldFiles.Count

        # Delete the files
        $oldFiles | Remove-Item -Force

        # Output the folder path and count of deleted files
        [PSCustomObject]@{
            FolderPath       = $folder
            DeletedFileCount = $fileCount
        }
    } | Format-Table -AutoSize

Notes:

Safety First: Test both scripts in a non-critical environment before running them on production data.

Recycle Bin: Files deleted with Remove-Item are permanently removed, not sent to the recycle bin.

Customizable Parameters: You can adjust $maxAge and $rootFolder to fit your requirements.

Feel free to check out my other post, Advanced File and Folder Creation with PowerShell, which includes more info on using PowerShell cmdlets to manage files!

-

Using Get-EventLog in PowerShell

The Get-EventLog cmdlet allows you to view event logs directly in your PowerShell terminal, similar to using Event Viewer.

Below are some quick examples to get started:

1. List Available Event Log Types

2. Show Events by Count

3. Filter Events by Message

4. View Full Message of an Event

1. List Available Event Log Types

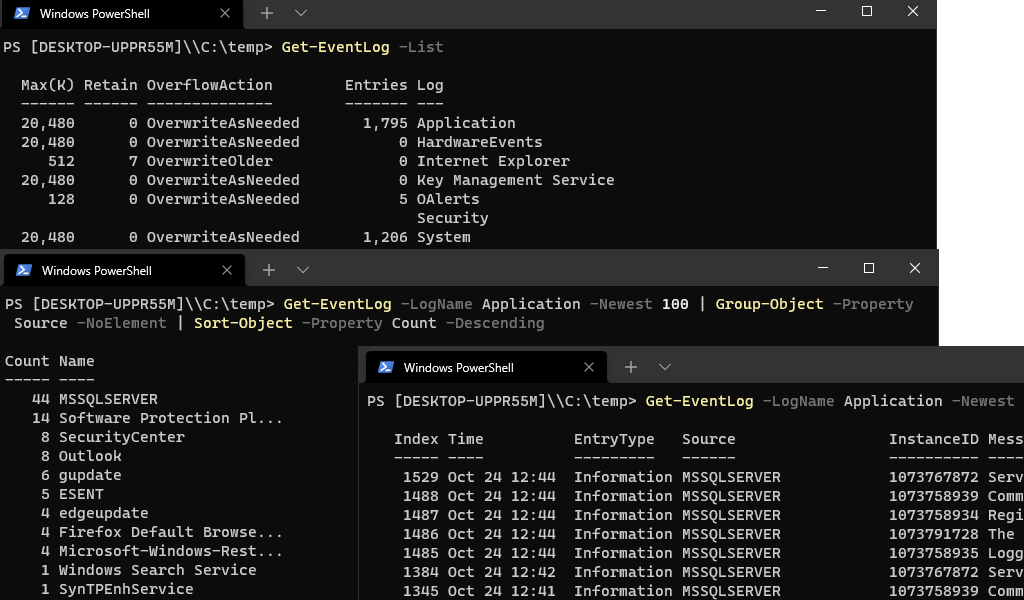

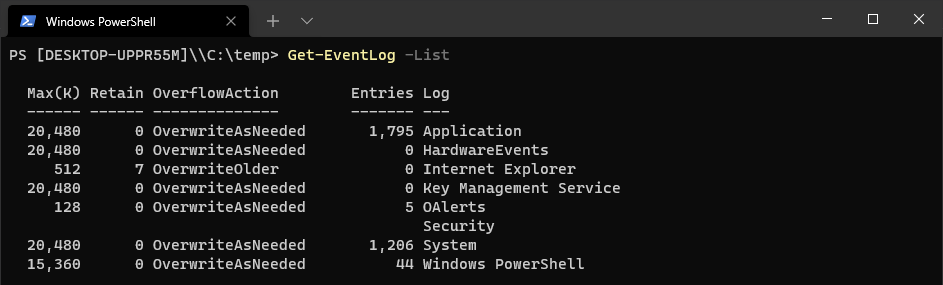

    # Get List of Event Logs Available
    Get-EventLog -List

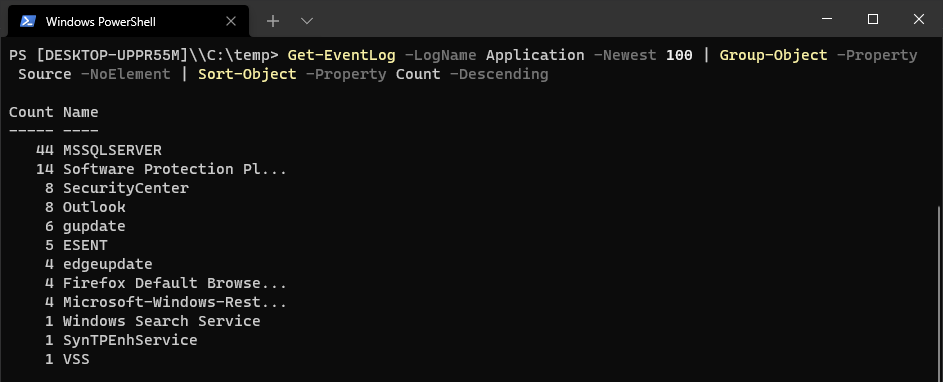

2. Show Events by Count

    # Show Events by Count
    Get-EventLog -LogName Application |
        Group-Object -Property Source -NoElement |
        Sort-Object -Property Count -Descending

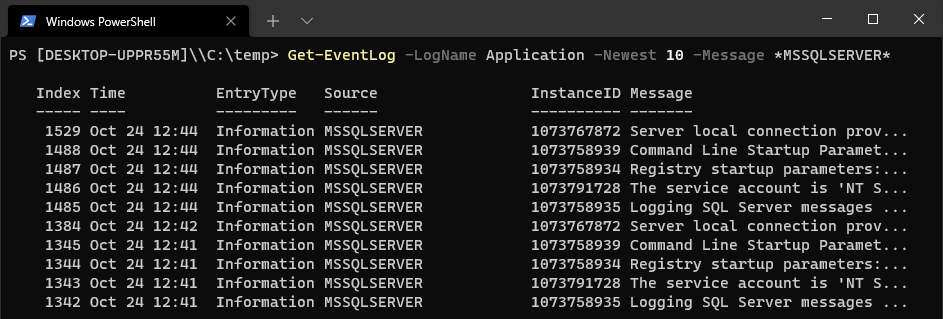

3. Filter Events by Message

Retrieve the 10 newest events containing “MSSQLSERVER” in the message:

    # Show Events by Message Name
    Get-EventLog -LogName Application -Newest 10 -Message *MSSQLSERVER*

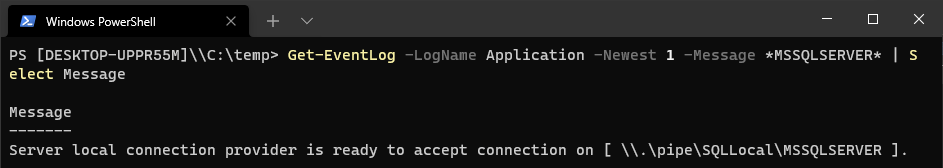

4. View Full Message of an Event

Display the full message of the most recent event containing “MSSQLSERVER”:

    # Show Full Message of an Event (-ExpandProperty prints the message text untruncated)
    Get-EventLog -LogName Application -Newest 1 -Message *MSSQLSERVER* | Select-Object -ExpandProperty Message

Hope some of this was useful for you!

-

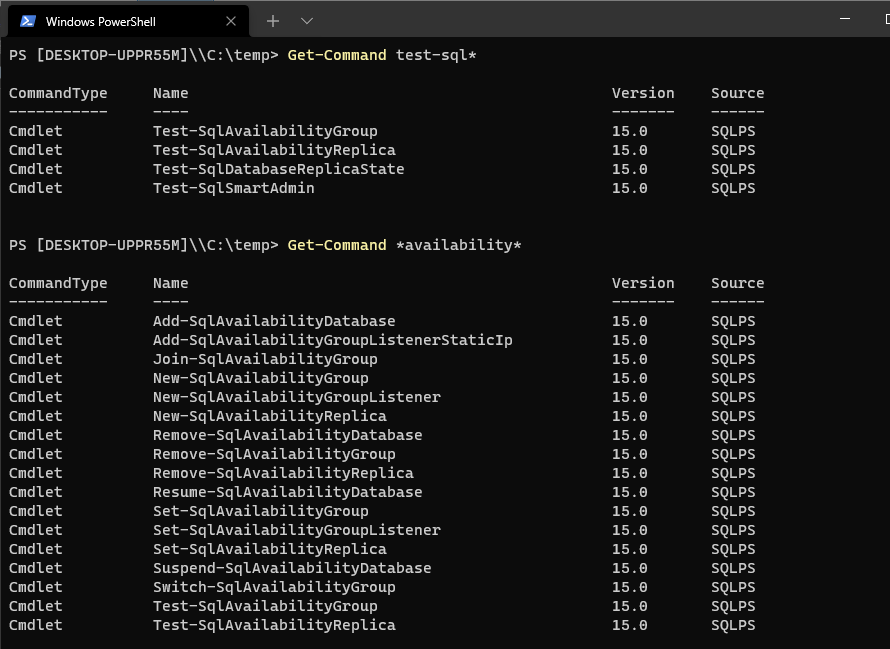

Get-Command in PowerShell

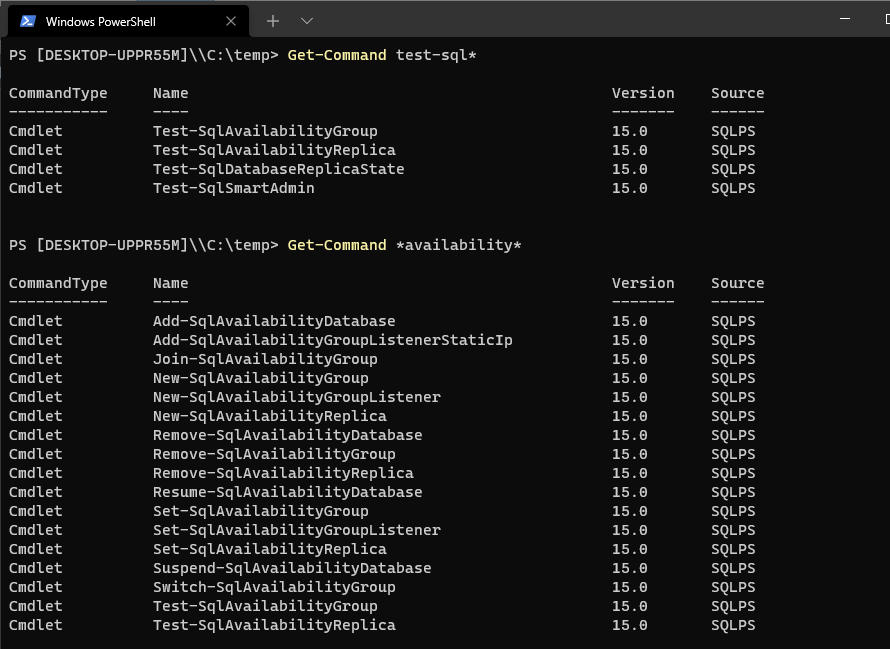

Get-Command is a cmdlet in PowerShell that lets you retrieve a list of all commands available on your system, including cmdlets, functions and aliases. It’s a go-to tool for discovering new commands and understanding their usage and capabilities.

You can use Get-Command with wildcards to filter results based on patterns. This is particularly useful when you’re not sure of a command’s exact name but know part of it.

    # Get-Command works best with wildcards (*)
    Get-Command test-sql*

We can list commands that belong to a specific PowerShell module, or that have a specific verb or noun in their name. For example, run Get-Command Test-* to retrieve all PowerShell cmdlets with the ‘Test’ prefix.

This was just a quick post / note of a SQL PowerShell Get-Command cmdlet example. Hope it was useful!