This post covers how to manage S3 Buckets with AWS CLI and explains some local Operating System (OS) considerations when running such commands.

First of all, you will need AWS CLI installed and be authenticated to your AWS Account. I cover both in previous blog posts:

> How to Install and Configure AWS CLI on Windows

> How to Install and Configure AWS CLI on Ubuntu

I work more often in PowerShell than on Linux. AWS CLI commands do the same thing in both environments, but the native shell language (PowerShell or Bash) is what you use around them, for example to manipulate output data or to wrap commands in a For Each loop. All commands in this post can run on either OS.
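As a rough illustration of wrapping an AWS CLI command in a For Each loop, here is a minimal Bash sketch, assuming Bash on Linux or WSL. The functions `bucket_names` and `for_each_bucket` are hypothetical helpers, not part of the AWS CLI: the first pulls the bucket names out of `aws s3 ls` output, and the second runs a command once per bucket, much as you would pipe into `ForEach-Object` in PowerShell.

```bash
#!/usr/bin/env bash

# Hypothetical helper: `aws s3 ls` (with no bucket path) prints one line
# per bucket in the form "<creation-date> <creation-time> <bucket-name>".
# Keep only the third field, the bucket name.
bucket_names() {
  awk 'NF >= 3 { print $3 }'
}

# Hypothetical helper: run a command once per bucket, appending the
# bucket name as the final argument (similar to ForEach-Object).
for_each_bucket() {
  aws s3 ls | bucket_names | while read -r bucket; do
    # Redirect stdin so the command cannot swallow the rest of the bucket list
    "$@" "$bucket" < /dev/null
  done
}

# Usage: for_each_bucket echo "Bucket:"
```

The AWS CLI part stays the same on either OS; only the looping and text handling around it changes with the shell.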

PowerShell is cross-platform, and many familiar Linux and DOS commands have worked in it since its release, several of them as built-in aliases. Some are essential for everyday use, for example ping, cd, ls, mkdir, rm, cat and pwd. Newer Windows releases also ship tools such as tar and curl, which is good to see. Plus, we have WSL to run Linux commands that aren't otherwise supported.

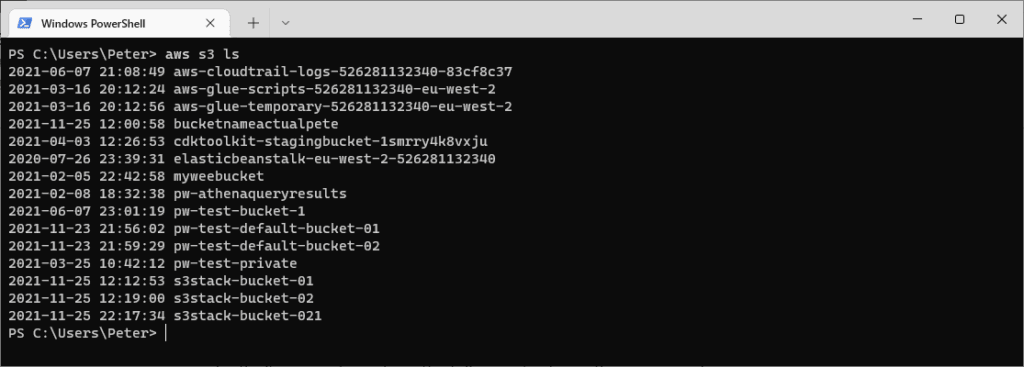

Here’s one of the simplest examples, which lists all the S3 buckets owned by the AWS Account of the IAM User running the command.

```shell
# List all buckets in an AWS Account
aws s3 ls
```

The Default Region is set during the AWS CLI configuration covered in the posts linked above. We can change it by running `aws configure set region <region>` or by setting the `AWS_DEFAULT_REGION` environment variable. Alternatively, we can pass the `--region` option to a single command to override the default for that call only. Note that `aws s3 ls` with no bucket path lists every bucket the account owns, whichever Region each one is in. There are more ways to run commands across multiple Regions, which I hope to cover another day.
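To see which of your buckets live in one particular Region, one approach is to ask each bucket for its location with `get-bucket-location`, the same command used later in this post. This is a minimal Bash sketch, not an official recipe: `normalize_location` and `buckets_in_region` are hypothetical names, and it assumes a us-east-1 bucket reports its null `LocationConstraint` as `None` in text output.

```bash
#!/usr/bin/env bash

# Hypothetical helper: turn raw `get-bucket-location --output text` output
# into a Region name. Buckets in us-east-1 have a null LocationConstraint,
# which the CLI prints as "None" in text output.
normalize_location() {
  case "$1" in
    "" | None) echo "us-east-1" ;;
    *) echo "$1" ;;
  esac
}

# Hypothetical helper: print every bucket you own whose Region matches $1.
buckets_in_region() {
  local target="$1" bucket location
  # Bucket names never contain whitespace, so splitting the tab-separated
  # list-buckets output on whitespace is safe here.
  for bucket in $(aws s3api list-buckets --query "Buckets[].Name" --output text); do
    location=$(aws s3api get-bucket-location --bucket "$bucket" --output text)
    if [ "$(normalize_location "$location")" = "$target" ]; then
      echo "$bucket"
    fi
  done
}

# Usage: buckets_in_region eu-west-1
```

This makes one API call per bucket, so it can be slow on an account with many buckets.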

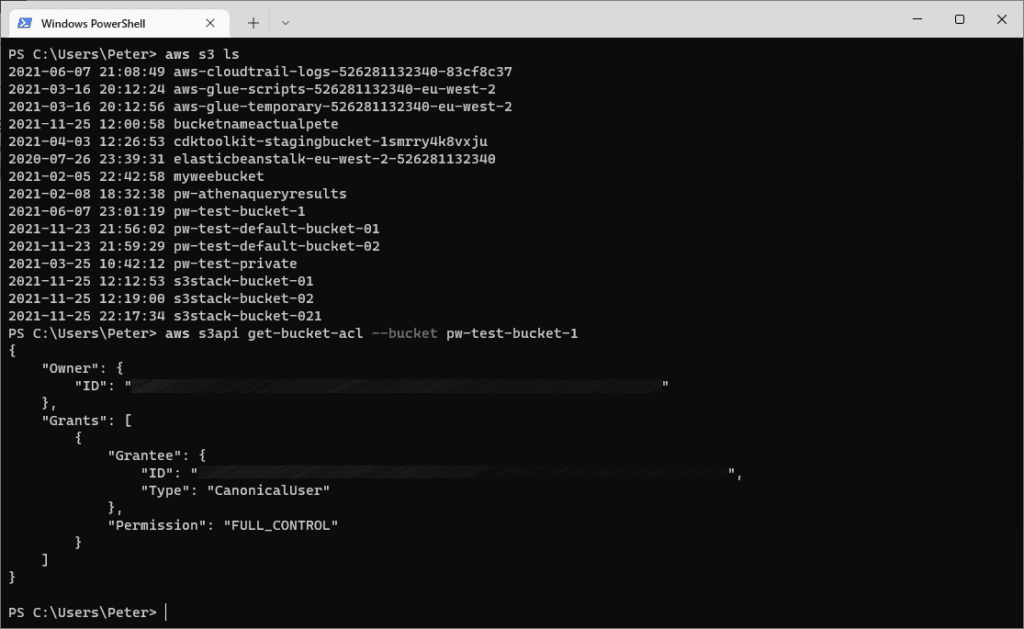

Now I’m going to run a command to show the Access Control List (ACL) of a bucket, using one of the Bucket Names returned by the previous command. This time, I’m using the s3api command rather than s3; the AWS CLI documentation explains the differences between them. When running AWS CLI commands, the API reference docs will always help you out.

```shell
# Show S3 Bucket ACL
aws s3api get-bucket-acl --bucket my-bucket
```
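Rather than picking bucket names one at a time, the ACL command can be wrapped in a loop. A minimal Bash sketch, assuming your credentials can list and read every bucket; `show_all_bucket_acls` is a hypothetical name. It uses `--query` with the JMESPath expression `Buckets[].Name` so that `list-buckets` returns only the names.

```bash
#!/usr/bin/env bash

# Hypothetical helper: print the ACL of every bucket the account owns.
show_all_bucket_acls() {
  local bucket
  # --query "Buckets[].Name" keeps only the names; --output text prints
  # them tab-separated, and bucket names never contain whitespace.
  for bucket in $(aws s3api list-buckets --query "Buckets[].Name" --output text); do
    echo "== $bucket"
    aws s3api get-bucket-acl --bucket "$bucket" --output json
  done
}

# Usage: show_all_bucket_acls
```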

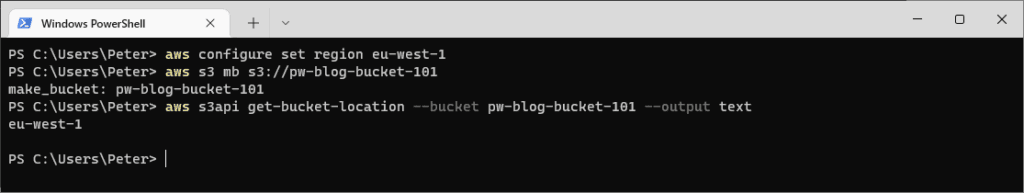

Next up, I’m going to create a bucket using the s3 command rather than s3api, because I want the new S3 Bucket to use my Default Region rather than specifying the Region within the command. For s3api create-bucket, AWS explains:

> “Regions outside of us-east-1 require the appropriate LocationConstraint to be specified in order to create the bucket in the desired region:
>
> `--create-bucket-configuration LocationConstraint=eu-west-1`”
>
> AWS API Docs
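For comparison, this is roughly what the s3api route looks like when you pass the Region explicitly. A minimal Bash sketch; `create_bucket_in_region` is a hypothetical helper. It adds the LocationConstraint for every Region except us-east-1, which is the default location and is rejected if passed explicitly as a LocationConstraint.

```bash
#!/usr/bin/env bash

# Hypothetical helper: create a bucket in an explicit Region via s3api.
create_bucket_in_region() {
  local bucket="$1" region="$2"
  if [ "$region" = "us-east-1" ]; then
    # us-east-1 is the default location, so no LocationConstraint is sent
    aws s3api create-bucket --bucket "$bucket" --region "$region"
  else
    # Every other Region needs a LocationConstraint matching --region
    aws s3api create-bucket --bucket "$bucket" --region "$region" \
      --create-bucket-configuration "LocationConstraint=$region"
  fi
}

# Usage: create_bucket_in_region pw-blog-bucket-101 eu-west-1
```

`aws s3 mb` handles this for you based on the configured Region, which is why it is the simpler choice here.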

The following commands set my Default Region to eu-west-1, create a new S3 Bucket in it and then verify the bucket's location with get-bucket-location.

```shell
# Change AWS CLI Default Region
aws configure set region eu-west-1

# Create a new S3 Bucket in your Default Region
aws s3 mb s3://pw-blog-bucket-101

# Check the Region of an S3 Bucket
aws s3api get-bucket-location --bucket pw-blog-bucket-101 --output text
```

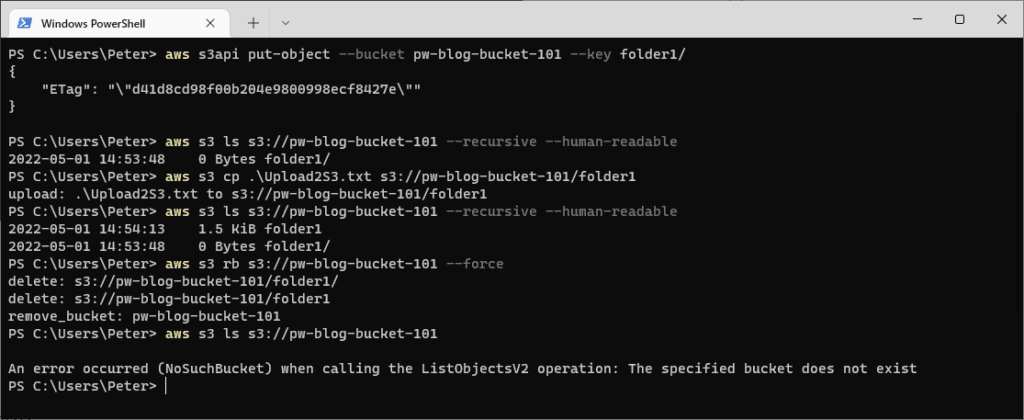

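And finally, to finish this off I’m going to:

- Create a folder within the new Bucket (in S3, a folder is just a zero-byte object whose key ends in `/`).
- List items in the S3 Bucket.
- Copy a file from my desktop into the folder.
- List items in the S3 Bucket again.
- Delete the Bucket, along with its contents.
- List the Bucket once more to confirm it has gone (this should return an error).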

```shell
# Create folder/object within an S3 Bucket
aws s3api put-object --bucket pw-blog-bucket-101 --key folder1/

# Show objects within the S3 Bucket
aws s3 ls s3://pw-blog-bucket-101 --recursive --human-readable

# Copy a local file into the folder above (the trailing / keeps the file name)
aws s3 cp ./Upload2S3.txt s3://pw-blog-bucket-101/folder1/

# Show objects within the S3 Bucket
aws s3 ls s3://pw-blog-bucket-101 --recursive --human-readable

# Delete the S3 Bucket (rb fails on a non-empty bucket, so --force removes its objects first)
aws s3 rb s3://pw-blog-bucket-101 --force

# List the S3 Bucket above (expect an error, as it no longer exists)
aws s3 ls s3://pw-blog-bucket-101
```
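When copying into a "folder", the trailing `/` on the destination matters: `aws s3 cp` treats a destination without it as the full object key, so `s3://pw-blog-bucket-101/folder1` would create an object named `folder1` rather than placing the file inside the folder. A minimal Bash sketch of a wrapper that always adds it; `upload_to_folder` is a hypothetical helper.

```bash
#!/usr/bin/env bash

# Hypothetical helper: copy a local file into a "folder" (key prefix).
upload_to_folder() {
  local file="$1" bucket="$2" folder="${3%/}"   # strip any trailing / first
  # The trailing / tells the CLI the destination is a prefix, so the object
  # keeps its local file name, e.g. folder1/Upload2S3.txt
  aws s3 cp "$file" "s3://$bucket/$folder/"
}

# Usage: upload_to_folder ./Upload2S3.txt pw-blog-bucket-101 folder1
```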
